Recent research from Gartner revealed that more than three quarters of companies are investing or planning to invest in big data by 2017. As with the adoption of any new technology, the journey to properly leveraging data is a long and challenging one – just 0.5 percent of data is ever analysed or used. However, many are now beginning to understand the critical role data and analytics will play in the future of successful companies; the Economist Intelligence Unit recently found that the majority of business leaders believe data is “vital” to their organisation’s profitability.

As adoption and the understanding of what data can and should achieve grow among businesses, data is shifting from a vision to a reality for many. It has gone from a buzzword to business practice.

At Tableau, we have first-hand insight into how businesses are working to capture and analyse data. Customers that span nearly every industry, from small businesses to enterprise corporations, use Tableau Online to connect to and analyse their data, each with their own unique data sets and objectives. Their data is stored in a wide variety of places, from individual databases to cloud, on-premise, and hybrid deployments.

In an effort to help businesses navigate this diverse landscape, we’ve developed the ‘Cloud Data Brief’, which analyses the usage patterns of over one million anonymous data source connections published to Tableau Online by more than 4,000 customers.

This brief identifies emerging trends that we believe are representative of the wider industry and that all organisations must consider when developing a data strategy.

Data’s centre of gravity is moving to the cloud.

Cloud is revolutionising IT, and this is particularly true of data. As the size, scale and complexity of data grow, cloud has proved the ideal platform on which to host it, empowering businesses to easily capture and store their data in cloud databases and Hadoop ecosystems.

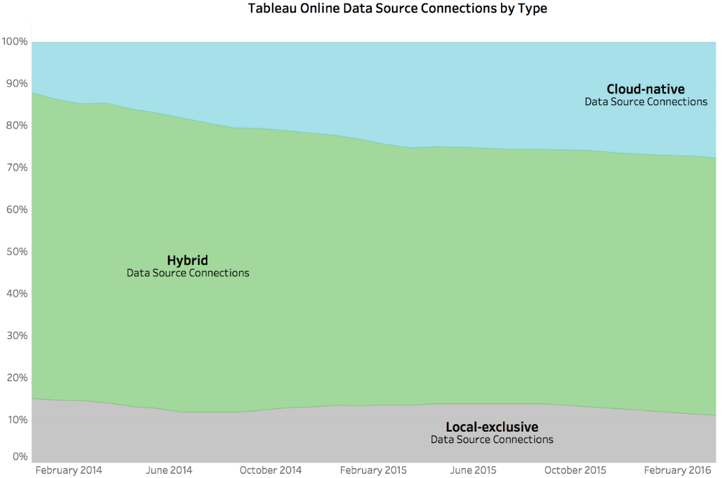

Using Tableau as an example, we saw a 28 percent increase in usage of cloud-hosted data within Tableau Online over the past 15 months. By the first quarter of 2016, nearly 70 percent of our customer data was hosted in the cloud. This marks a noticeable shift. To put things into perspective, just last year the split was almost even at 55-45 cloud to on-premise deployment.

Data gravity describes the pull that data exerts on services and applications. If your data lives in the cloud, for example, you’ll likely want your data tools – from processing to analytics – running in the cloud as well. Data’s centre of gravity now sits squarely in the cloud, and that pull will only grow stronger in the future. Organisations building data ecosystems should concentrate their efforts on cloud workflows to ensure their systems are ready for this change in data gravity.

In the move to cloud, hybrid data technologies are becoming the norm

Hybrid databases can be deployed on both cloud and on-premise networks. When not all your data can be moved to the cloud, or you want to make the move incrementally, hybrid options give the flexibility to bridge that gap between cloud-hosted and on-premise environments.

Gartner recently predicted these hybrid offerings will become the norm by 2018. The Brief reveals, however, that for Tableau’s customers transitioning to the cloud, hybrid is already the norm.

The reason for this is straightforward. As established businesses move their operations to the cloud, they often find themselves with data that must remain on-premise. Security and privacy requirements, for example, can necessitate storage behind corporate firewalls. Other times, moving operations to the cloud is a slow and incremental process achieved over months or years. These scenarios are driving demand for hybrid solutions.

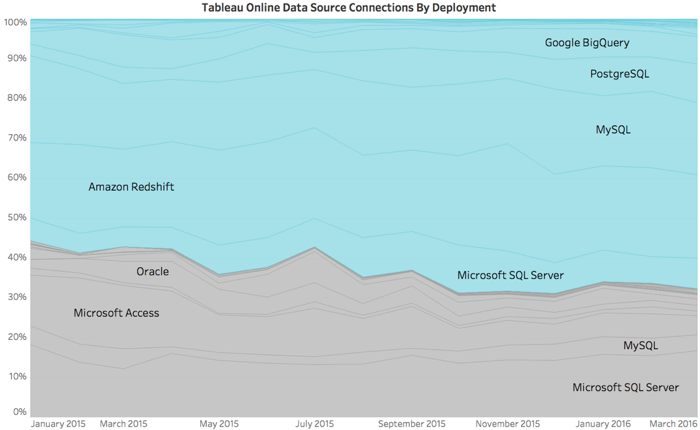

Returning to our data as an example, the most popular hybrid databases used in Tableau Online are SQL Server, MySQL, and PostgreSQL. In the first quarter of 2016, these three databases accounted for over half of all data sources used by our customers.

Meanwhile, connections to cloud-native data sources like Amazon Redshift and Google BigQuery are rapidly gaining market share. At the beginning of 2014, they represented just 12 percent of all connections in Tableau Online. By the first quarter of 2016, they had grown to 28 percent.

Even with such consistent growth, however, cloud-native data sources account for only a quarter of all connections used in Tableau Online. Hybrid sources, on the other hand, have never dropped below 60 percent.
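For readers who want to run a similar breakdown on their own connection logs, here is a minimal Python sketch of how such shares could be calculated. It is not how the Brief itself was produced: the `category_shares` function and the category mapping are hypothetical, loosely following the groupings named in this article, and the connection counts are invented purely for illustration.

```python
from collections import Counter

# Hypothetical mapping, loosely following the groupings named in the article.
CATEGORY_BY_SOURCE = {
    "SQL Server": "hybrid",
    "MySQL": "hybrid",
    "PostgreSQL": "hybrid",
    "Amazon Redshift": "cloud-native",
    "Google BigQuery": "cloud-native",
    "Excel": "file-based",
    "Google Analytics": "web application",
}


def category_shares(connection_counts):
    """Return each category's percentage share of all connections."""
    totals = Counter()
    for source, count in connection_counts.items():
        # Sources missing from the mapping are grouped under "other".
        totals[CATEGORY_BY_SOURCE.get(source, "other")] += count
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: 100 * n / grand_total for category, n in totals.items()}


# Invented counts for illustration only; these are NOT figures from the Brief.
example_counts = {
    "SQL Server": 30,
    "MySQL": 20,
    "PostgreSQL": 10,
    "Amazon Redshift": 15,
    "Google BigQuery": 10,
    "Excel": 10,
    "Google Analytics": 5,
}

shares = category_shares(example_counts)
for category, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{category}: {share:.0f}%")
```

Keeping the mapping in a single dictionary makes it straightforward to reclassify a source, or to add a new one, as the landscape changes.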

Data storage is expanding beyond our traditional concepts of databases and businesses are analysing data from many sources.

With the rise of the Internet of Things (IoT), data is now flowing from everywhere and everything. As a result, the capture and storage landscape has expanded to meet the requirements of new and variable streams of data.

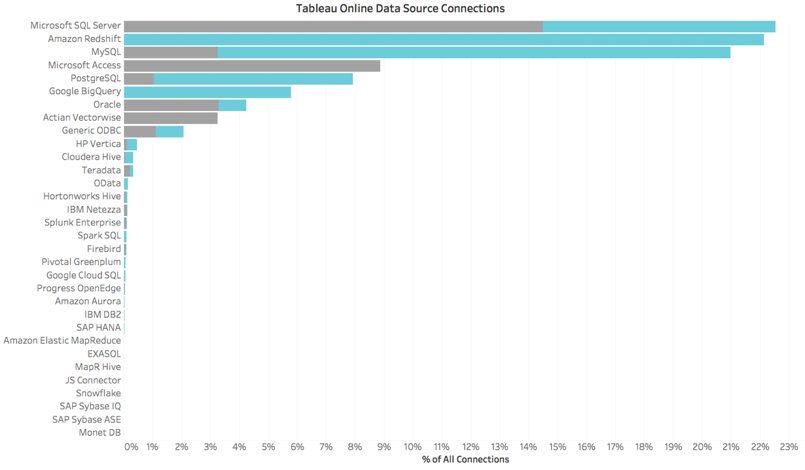

There are over 40 types of data sources represented in Tableau Online. Even when file-based sources like Excel and web applications like Google Analytics are excluded, there are 32 distinct types of databases and Hadoop ecosystems represented. This diversity is indicative of the wide and varied landscape of data tools available today. For instance, newcomers like Snowflake are rethinking the delivery of databases, while hosted solutions like Amazon Elastic MapReduce have extended the capabilities of traditional Hadoop ecosystems.

In the future, the landscape will almost inevitably become more crowded. There’s a clear need for businesses to connect their analytics tools to not one, but many data sources that span databases, Hadoop ecosystems, and web applications.

For organisations looking to capitalise on the breakneck speed of innovation in the data landscape, it is necessary to build a data workflow that focuses on flexibility and choice above all else.

James Eiloart is Tableau Software’s Senior Vice President EMEA.

With 26+ years in the software industry, leading international sales teams and building strong partner ecosystems, James has held executive positions in strategic alliances, global and channel sales, and marketing. Prior to Tableau, James was SVP global sales at Alterian, building and running sales and marketing for both EMEA and Asia Pacific. He has also served in executive positions at E.piphany, Remedy, Compuware and Pansophic Systems. James is a graduate of the University of Leeds and holds a BSc (Hons) in data processing.